Is AI Really Destroying the Planet? Here’s What We Found Out

I saw a post the other day with one of those classic “AI is ruining the world” headlines, designed to spark outrage and get clicks. Normally, I’d roll my eyes, but this one stuck with me. It wasn’t just clickbait; it raised a real concern about how much carbon I emit each and every time I use ChatGPT.

In a recent group meeting, some colleagues and I started discussing it, but when it came to the actual environmental impact of AI - how much energy it uses, how much water it consumes - no one had concrete numbers. Just a lot of “It’s massive,” “It’s bad,” and “It’s wasting water” statements.

I’m all for environmental responsibility, but I hate greenwashing and baseless fear-mongering. If we’re going to talk about the impact of AI, we need facts, not vague panic. So, I promised to dig into the numbers and find out what’s actually happening. One comment in particular stuck with me because it didn’t seem right: “AI wastes heaps of water.” But water is an infinite resource in a closed system, right? Like in nuclear reactors, where water is used for cooling and then recycled? Unless AI data centers are draining river systems the way Australia’s cotton farms deplete the Murray-Darling, wouldn’t the water just be used and condensed back again?

So, I went looking for real answers - with the help of ChatGPT, ironically - and here’s what I found…

Artificial intelligence (AI) technologies, such as OpenAI's ChatGPT, have become integral to various applications, from virtual assistants to content generation. However, their operation and maintenance involve significant energy consumption and carbon emissions, an environmental impact we should all be aware of.

Energy Consumption and Carbon Emissions

Operating AI models requires substantial computational power, leading to notable energy consumption and associated carbon emissions. For instance, each interaction with ChatGPT consumes approximately 0.0025 kilowatt-hours (kWh) of electricity, resulting in about 4.32 grams of CO₂ emissions per query (Smartly.ai).

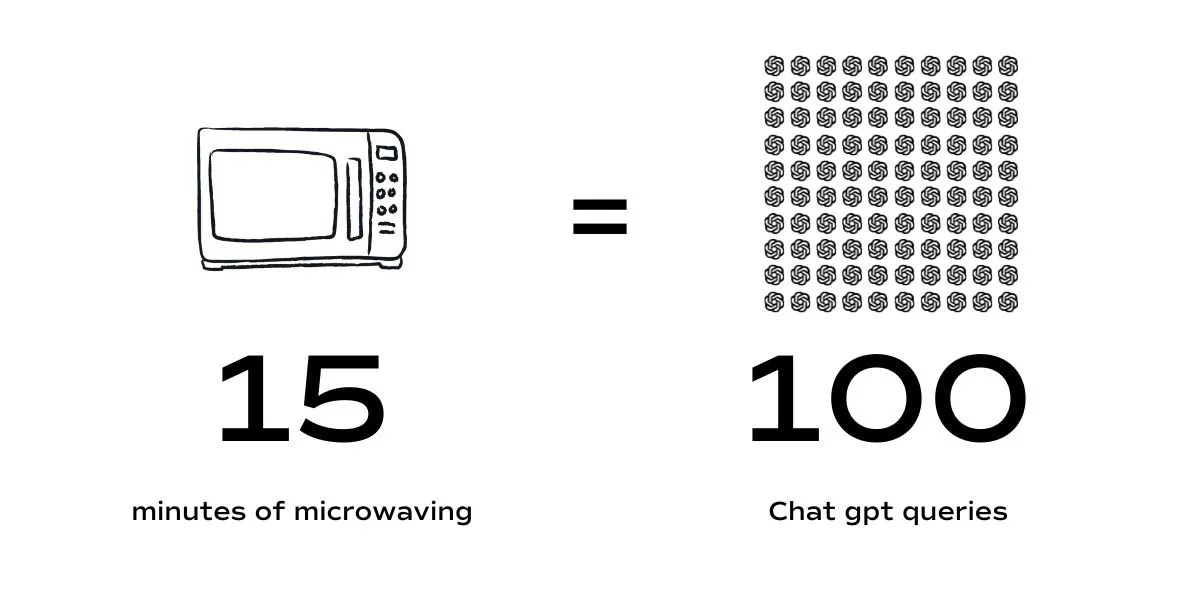

To put this into perspective, a user engaging with ChatGPT 100 times daily would consume around 0.25 kWh, equivalent to running a microwave oven for 15 minutes or powering a refrigerator for about five hours. While individual interactions may seem minimal, the cumulative effect across millions of users adds up to a far larger environmental impact.
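The arithmetic behind that comparison can be sketched in a few lines of Python. The per-query figures are the ones quoted above; the appliance ratings (a 1,000 W microwave and a fridge averaging 50 W) are my own ballpark assumptions, not numbers from the sources, and `daily_footprint` is just an illustrative helper name.

```python
# Figures quoted in the article
KWH_PER_QUERY = 0.0025   # kWh of electricity per ChatGPT query
CO2_G_PER_QUERY = 4.32   # grams of CO2 per query

# Assumed appliance ratings (ballpark values, not from the sources)
MICROWAVE_KW = 1.0       # a 1,000 W microwave
FRIDGE_AVG_KW = 0.05     # a fridge averaging 50 W over time


def daily_footprint(queries_per_day: int) -> dict:
    """Return energy, CO2 and appliance equivalents for one day's queries."""
    energy_kwh = queries_per_day * KWH_PER_QUERY
    return {
        "energy_kwh": energy_kwh,
        "co2_g": queries_per_day * CO2_G_PER_QUERY,
        "microwave_minutes": energy_kwh / MICROWAVE_KW * 60,
        "fridge_hours": energy_kwh / FRIDGE_AVG_KW,
    }


if __name__ == "__main__":
    for name, value in daily_footprint(100).items():
        print(f"{name}: {value:.2f}")
```

With these assumptions, 100 queries work out to 0.25 kWh, 15 microwave minutes and 5 fridge hours, which matches the comparison above. Swap in your own appliance ratings to see how sensitive the equivalents are.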

Some basic stats.

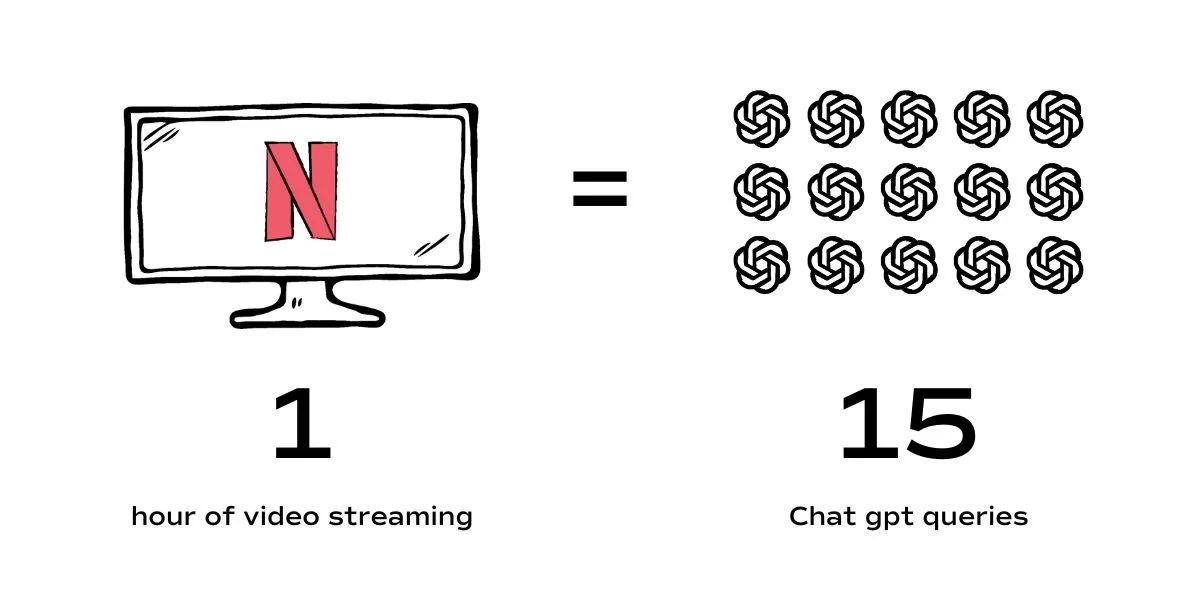

15 ChatGPT queries equate to the CO2 emissions of watching one hour of video streaming.

139 queries are roughly equivalent to the emissions from one load of laundry washed and dried on a clothesline.

42,000 queries equate to the emissions of a one-way flight between Sydney and Melbourne.
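To see what those comparisons imply in absolute terms, here is a rough sketch that multiplies each query count by the 4.32 g per-query figure quoted earlier. The resulting gram values are derived, not taken from the sources, so treat them as a sanity check rather than official numbers.

```python
CO2_G_PER_QUERY = 4.32  # grams of CO2 per query, as quoted in the article

# Query counts from the comparisons above
EQUIVALENTS = {
    "one hour of video streaming": 15,
    "one load of laundry, line-dried": 139,
    "one-way flight, Sydney to Melbourne": 42_000,
}


def implied_co2_grams(queries: int) -> float:
    """CO2 in grams implied by a number of queries at the quoted rate."""
    return queries * CO2_G_PER_QUERY


if __name__ == "__main__":
    for activity, queries in EQUIVALENTS.items():
        grams = implied_co2_grams(queries)
        print(f"{activity}: {queries:,} queries = {grams / 1000:.2f} kg CO2")
```

On these figures the flight comparison implies roughly 181 kg of CO2, and the hour of streaming about 65 g.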

Training large AI models also requires substantial computational power, leading to significant electricity consumption. For instance, developing OpenAI's GPT-3 model consumed approximately 1,287 megawatt-hours of electricity, resulting in 552 tons of carbon dioxide emissions, equivalent to the annual emissions of 123 gasoline-powered cars (Wikipedia).
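One way to relate the training figures to everyday use is to express them in "query equivalents". The sketch below does that with the numbers quoted in this article; it assumes the 552 tons are metric tons, and the function names are purely illustrative.

```python
# Figures quoted in the article
TRAINING_MWH = 1_287        # electricity used to train GPT-3
TRAINING_CO2_TONS = 552     # assumed to be metric tons
CAR_EQUIVALENTS = 123       # gasoline cars, annual emissions
KWH_PER_QUERY = 0.0025
CO2_G_PER_QUERY = 4.32


def queries_matching_training_energy() -> float:
    """Number of queries using as much electricity as the training run."""
    training_kwh = TRAINING_MWH * 1_000
    return training_kwh / KWH_PER_QUERY


def queries_matching_training_co2() -> float:
    """Number of queries emitting as much CO2 as the training run."""
    training_grams = TRAINING_CO2_TONS * 1_000_000
    return training_grams / CO2_G_PER_QUERY


def tons_per_car() -> float:
    """Annual CO2 per car implied by the 123-car comparison."""
    return TRAINING_CO2_TONS / CAR_EQUIVALENTS


if __name__ == "__main__":
    print(f"By energy: {queries_matching_training_energy():,.0f} queries")
    print(f"By CO2:    {queries_matching_training_co2():,.0f} queries")
    print(f"Per car:   {tons_per_car():.2f} tons of CO2 a year")
```

The two query counts come out quite different (about 515 million by energy versus about 128 million by CO₂). That is because the per-query and training figures come from different sources and imply different emissions per kWh, so the comparison is only a rough guide.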

Data Center Operations

The infrastructure supporting AI models comprises extensive data centers that require continuous power for operation and cooling. In 2022, data centers consumed approximately 460 terawatt-hours (TWh) of electricity, accounting for about 2% of global electricity usage.

Projections indicate that by 2026 this consumption could double, roughly matching Japan's current electricity usage (Data Center Frontier).
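As a quick check on those numbers, the following sketch derives the global electricity total implied by "460 TWh is about 2%" and the doubled 2026 figure. Both outputs are back-of-the-envelope values computed from the article's figures, not independently sourced data.

```python
# Figures quoted in the article
DATA_CENTER_TWH_2022 = 460   # data center electricity use in 2022
SHARE_OF_GLOBAL = 0.02       # "about 2%" of global electricity usage


def implied_global_twh() -> float:
    """Global electricity use implied by the 2022 figures."""
    return DATA_CENTER_TWH_2022 / SHARE_OF_GLOBAL


def projected_2026_twh(growth_factor: float = 2.0) -> float:
    """Data center use in 2026 if consumption doubles, as projected."""
    return DATA_CENTER_TWH_2022 * growth_factor


if __name__ == "__main__":
    print(f"Implied global use: {implied_global_twh():,.0f} TWh")
    print(f"Projected 2026 data center use: {projected_2026_twh():,.0f} TWh")
```

That gives an implied global total of around 23,000 TWh and a 2026 data center figure of about 920 TWh.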

OpenAI's plans to construct data centers with a capacity of 5 gigawatts (GW) underscore the escalating energy demands of AI technologies. A 5 GW facility would draw power equivalent to the output of about five nuclear reactors, highlighting the substantial energy requirements for running and maintaining such centers (New York Post, Ars Technica).
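To get a feel for what 5 GW means over a year, here is a rough conversion from power to annual energy. It assumes the facility runs at full load around the clock and that a typical reactor delivers about 1 GW; both are simplifying assumptions of mine, not figures from the sources.

```python
FACILITY_GW = 5            # capacity quoted in the article
HOURS_PER_YEAR = 8_760     # 365 days x 24 hours
REACTOR_GW = 1.0           # assumed output of one nuclear reactor
DATA_CENTER_TWH_2022 = 460 # 2022 data center use quoted earlier


def annual_twh(capacity_gw: float = FACILITY_GW) -> float:
    """Annual energy in TWh, assuming continuous full-load operation."""
    return capacity_gw * HOURS_PER_YEAR / 1_000


def reactor_equivalents(capacity_gw: float = FACILITY_GW) -> float:
    """Number of assumed 1 GW reactors needed to supply the capacity."""
    return capacity_gw / REACTOR_GW


if __name__ == "__main__":
    energy = annual_twh()
    print(f"Annual energy: {energy:.1f} TWh")
    print(f"Reactor equivalents: {reactor_equivalents():.0f}")
    print(f"Share of 2022 data center use: {energy / DATA_CENTER_TWH_2022:.1%}")
```

Under those assumptions a single 5 GW facility would use about 43.8 TWh a year, a bit under a tenth of what all data centers consumed in 2022.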

Per-Prompt Energy Usage

On average, each ChatGPT query consumes about 0.0025 kWh of electricity. While this may appear negligible on an individual level, the aggregate energy consumption becomes significant when considering the vast number of daily interactions globally (DeTEAPOT).

Environmental Considerations

The substantial energy consumption of AI technologies contributes to increased carbon emissions, particularly if the energy is sourced from fossil fuels. Additionally, the continuous operation of data centers necessitates robust cooling systems, often leading to considerable water usage. This puts further pressure on environmental resources, especially where water is scarce in the areas of operation.

Addressing these challenges requires a concerted effort to enhance the energy efficiency of AI models, transition data centers to renewable energy sources, and implement sustainable practices in AI development and deployment.

The Bottom Line

On an individual level, a single AI query might seem insignificant — but scale that across millions of users, 24/7 operations, and the immense power needed to train and run these models, and the environmental impact becomes staggering. The rise of AI is fueling a surge in energy demand, and unless data centers and governments prioritise sustainable solutions, we risk an even greater reliance on fossil fuels.

Researching this topic has made me acutely aware of my own energy footprint — not just in using tools like ChatGPT, but in all the small tech luxuries I rely on daily: streaming shows, running the fridge, tossing on a load of laundry. It all adds up. And if I’m one of billions doing the same, the collective impact is enormous.

It’s clearer than ever that for us to continue enjoying the incredible technological advancements we’ve built, we need to radically shift how we power them. That means breaking ties with coal-powered infrastructure and accelerating the global transition to renewable, environmentally sustainable energy sources.

The future of AI doesn’t just depend on technological breakthroughs — it depends on how we responsibly power it. Investing in clean energy, improving efficiency, and holding big tech accountable for greener operations isn’t just a “nice to have”. It’s essential.

Sources:

https://smartly.ai/blog/the-carbon-footprint-of-chatgpt-how-much-co2-does-a-query-generate

Ars Technica; New York Post; Reddit

https://deteapot.com/chatgpts-carbon-footprint-how-much-energy-does-your-ai-prompt-really-use

https://news.mit.edu/2025/explained-generative-ai-environmental-impact-0117

https://mit-genai.pubpub.org/pub/8ulgrckc/release/2

https://www.wired.com/story/true-cost-generative-ai-data-centers-energy?utm_source=chatgpt.com
